The number of organizations adopting Artificial Intelligence (AI) technologies has increased by more than 270% since 2015, according to Gartner. This technology represents an opportunity to address today’s economic, social and security challenges, but also an opportunity for cyber attackers, who see the attack surface of their targets grow and who can appropriate AI for malicious purposes. Elon Musk warned as early as 2017 about the dangers of unregulated AI, which he then called a “major risk” to our civilization.

What are the risks of AI-powered attacks and how is this technology being developed for defensive purposes by TEHTRIS?

AI attacks: what are the risks?

Although there have not been any large-scale AI attacks to date, this type of threat will undoubtedly emerge in the near future. The use of AI will increase the speed, scale and sophistication of attacks. For example, AI could be used to:

- Improve the reconnaissance phase and the practice of social engineering: by scanning data related to a target individual (on social networks, in email correspondence, etc.), AI would make it possible to impersonate the target with precision. Tailor-made spearphishing emails that use the tone, personality and language habits of the target will make the attack very difficult to detect.

- Improve the intrusion and attack phase by considering the context: AI-enabled malware can adapt to its environment to blend in and avoid detection, in particular by reproducing the behavior of a human user. After observation, it can learn in real time to target a specific endpoint or decide to self-destruct or pause. It will also be able to extract data unobtrusively by only targeting data of strategic interest.

- Corrupt AI systems by introducing erroneous data: one of the most pressing threats is the risk to other AI systems. An attacker who gains access to the dataset used to “train” an AI algorithm can alter it, jeopardizing the algorithm's proper functioning. Similarly, an attack that alters the incoming data could affect the AI’s response. Attacks that aim to make an AI system malfunction present serious risks, for example in the context of autonomous cars or industrial devices.

TEHTRIS CYBERIA: Responding to AI with AI

Cybersecurity experts today face multiple challenges: a record number of attacks, a lack of qualified personnel, cyber fatigue among SOC analysts, increasingly sophisticated attacks… This continuously threatening environment already requires the use of AI in cyber defense, which will in turn have to adapt to AI-powered attacks.

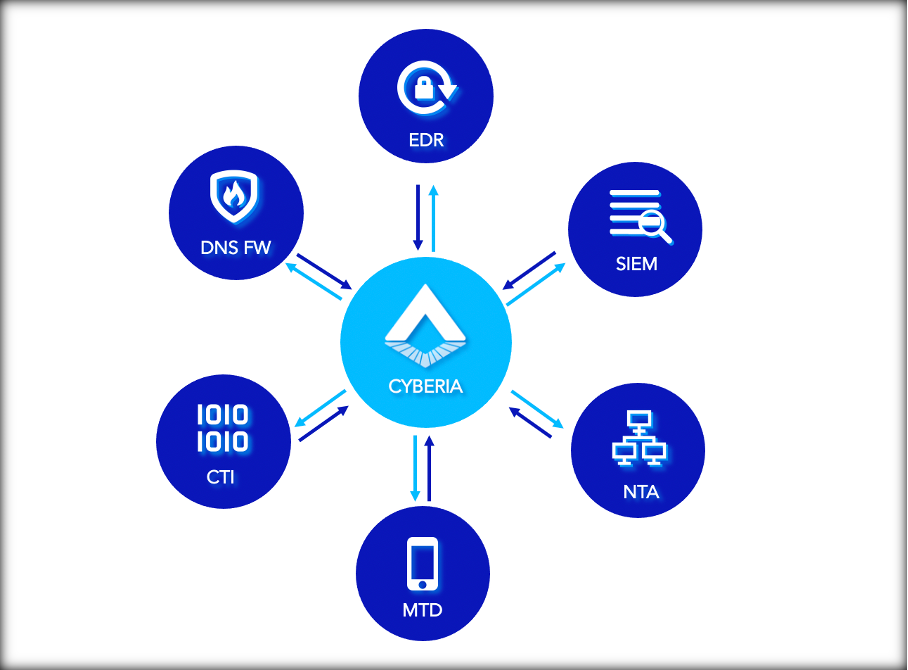

TEHTRIS develops its multi-modular artificial intelligence, CYBERIA, within its XDR platform to provide an autonomous and immediate response based on a three-level analysis: file analysis, behavioral analysis, and analysis of security alerts and events. The defensive AI identifies any anomalous behavior, even if the deviant activity is “subtle”, i.e. difficult to detect, and stops the attack. TEHTRIS SIEM, a security information and event management tool, collects massive amounts of data to feed security responses and refine user and entity behavior analytics (UEBA).

AI and Machine Learning used for cybersecurity purposes at TEHTRIS enable:

- Greater efficiency in preventing malware and any other type of attack from infiltrating a system by enabling a 24/7 response without human intervention.

- Detection of advanced threats, including previously unknown attack patterns, based on inherent characteristics instead of signatures. For example, if a piece of software is built to quickly encrypt many files at once, AI and Machine Learning systems will be able to detect this suspicious behavior. If the software takes steps to hide and make itself undetectable, that is another sign that it is not legitimate.

- Automated response, based on continuous learning from each incident, which takes over many repetitive tasks, such as responding to the high volume of low-risk alerts, and prioritizes the incidents that analysts need to handle.
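
The behavior-based detection described in the list above (software that quickly encrypts many files at once) can be illustrated with a deliberately simple rule. This is a minimal sketch, not how CYBERIA works: it replaces any learned model with a fixed sliding-window threshold, and the class name `BurstDetector`, its parameters and the default values are all hypothetical.

```python
from collections import defaultdict, deque


class BurstDetector:
    """Flags a process that modifies too many files within a short time window.

    Hypothetical illustration: thresholds are fixed by hand here, whereas a
    real product would learn what is normal for each environment.
    """

    def __init__(self, max_events=50, window_seconds=10.0):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # process id -> recent timestamps

    def record(self, pid, timestamp):
        """Record one file-modification event; return True if pid looks suspicious."""
        events = self._events[pid]
        events.append(timestamp)
        # Drop events that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_events


detector = BurstDetector(max_events=3, window_seconds=1.0)
# Process 1 touches four files in 0.3 s; process 2 touches one file every 5 s.
fast = [detector.record(1, t) for t in (0.0, 0.1, 0.2, 0.3)]
slow = [detector.record(2, t) for t in (0.0, 5.0, 10.0, 15.0)]
```

Only the fourth event of the fast process crosses the threshold; the slow process never does. No signature of the software is needed, only its behavior.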

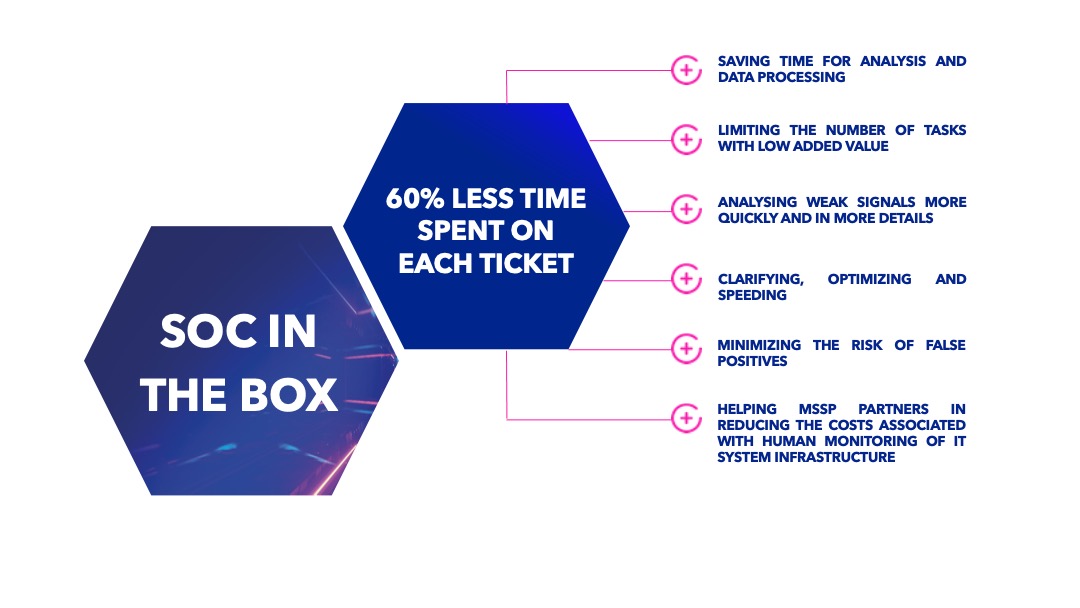

CYBERIA technology is implemented in all TEHTRIS products to prioritize alerts and optimize the detection of malware and malicious behavior. CYBERIA is the result of a combination of cutting-edge techniques in Machine Learning, Deep Learning, Active Learning and Reinforcement Learning, and it is fed with contextualized data drawn from Big Data. Its ability to prioritize the alerts sent to analysts was recognized by the French public initiative France Relance, which selected the CYBERIA project “Soc in the box 2.0” as the winner of the Grand Défi Cyber in June 2021.

Challenges of using AI in cybersecurity

A major challenge presented by AI in cybersecurity lies in the notion of explainability. AI systems are often compared to black boxes: the decision-making process is opaque due to the very nature of the technology, which is constantly adapting and evolving. The notion of XAI (eXplainable Artificial Intelligence) aims to make this process more transparent, and therefore explainable, and to give visibility into the context that led to a decision taken by the AI.

The aim of eXplainable Artificial Intelligence is therefore to:

- Contextualize an AI result for the analyst, who will be able to rely on it to assess its relevance or to enrich the response to a threat.

- Improve the AI system if biases are observed by the data scientist, as it would then be possible to identify where and why such biases have formed and thus take measures to mitigate them.

- By extension, identify an attack that aims to corrupt an AI system, by monitoring the quality of the data.
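
The first goal above, contextualizing a result for the analyst, can be sketched for the simplest possible case. The example assumes a linear scoring model, where each feature's contribution is just its weight times its value; real models usually need dedicated explanation techniques. The function name `explain_score`, the feature names and the weights are hypothetical and chosen only for illustration.

```python
def explain_score(weights, features):
    """Return a linear risk score and the per-feature contributions behind it.

    Contributions are ranked by absolute size, so the analyst sees first
    what drove the decision most.
    """
    contributions = {
        name: weights.get(name, 0.0) * value for name, value in features.items()
    }
    score = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical weights and observed features for one alert.
weights = {"files_encrypted_per_min": 0.02, "hides_process": 1.5, "signed_binary": -1.0}
features = {"files_encrypted_per_min": 100, "hides_process": 1, "signed_binary": 0}

score, ranked = explain_score(weights, features)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Instead of a bare score, the analyst sees that the encryption rate and the attempt to hide account for the alert. The same breakdown helps a data scientist spot a feature that weighs more than it should.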

More generally, explainability is a growing field of research that aims, on the one hand, to improve AI models and the use of their predictions, and on the other hand, to generally increase the confidence of actors in this technology.

The ethical dimension of AI use is another important issue. Efforts to regulate the use of these technologies are being made both by international organizations (the European Commission presented a White Paper on AI strategy in 2020) and by private actors (Microsoft recently published its second white paper in favor of “responsible intelligence”). TEHTRIS, which has made ethics a core value of its business, intends to use its AI technology to help secure cyberspace. The data feeding CYBERIA, and in particular its behavioral analysis technology (UEBA), is anonymized to guarantee the confidentiality of personal data.

The expansion and improvement of AI technology thus de facto increases the possibility of using AI for malicious purposes. This escalation creates the need to use AI defensively, since a purely “human” response to an AI-powered attack would not be proportionate. Such attacks, which allow more precise and sophisticated targeting, will be more impactful in both the reconnaissance and intrusion phases, benefiting from real-time context and behavioral analysis. In response, defensive AI can already analyze and respond instantly to any observed anomalous behavior and detect sophisticated threats.

At the same time, the lack of data in cybersecurity, a constantly evolving field, does not yet allow AI to develop fully on a stable base of data. In cyber defense, AI must therefore be used in addition to fundamental cybersecurity rules and other tools such as cryptography. Indeed, the AI vs. AI battle will revolve around one question: which AI will have more data, and so outperform the other?

Benefiting from more than 5 years of research and development, TEHTRIS’ CYBERIA modules are based on rich databases that make it possible to constantly adjust and improve their use in the products that make up the TEHTRIS XDR Platform. For example, the TEHTRIS DNS Firewall incorporates a Deep Learning model that detects randomly generated domain names (RGDs) with 99% reliability and 100% detection of SolarWinds attacks.
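
To give a rough intuition for what detecting randomly generated domain names involves, the sketch below uses a much cruder technique than the Deep Learning model mentioned above: a character-entropy heuristic. It is an assumption-laden illustration, not the TEHTRIS method. The function names, the minimum length and the entropy threshold are hypothetical, and a heuristic this simple produces both false positives and false negatives in practice.

```python
import math
from collections import Counter


def shannon_entropy(label):
    """Shannon entropy, in bits per character, of a string."""
    counts = Counter(label)
    total = len(label)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_generated(domain, min_length=10, entropy_threshold=3.5):
    """Heuristic: a long leftmost label with high character entropy looks random.

    Both thresholds are hypothetical values picked for this example.
    """
    label = domain.lower().split(".")[0]
    if len(label) < min_length:
        return False
    return shannon_entropy(label) >= entropy_threshold


checks = {
    "google.com": looks_generated("google.com"),
    "xk7q2vz9p4mwb8rt.com": looks_generated("xk7q2vz9p4mwb8rt.com"),
    "aaaaaaaaaaaa.com": looks_generated("aaaaaaaaaaaa.com"),
}
```

Only the second domain is flagged: its sixteen distinct characters give an entropy of 4 bits per character. The short label and the repetitive label both pass. A learned model can pick up far subtler regularities than this single statistic, which is the point of training it on large volumes of data.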